June 2025

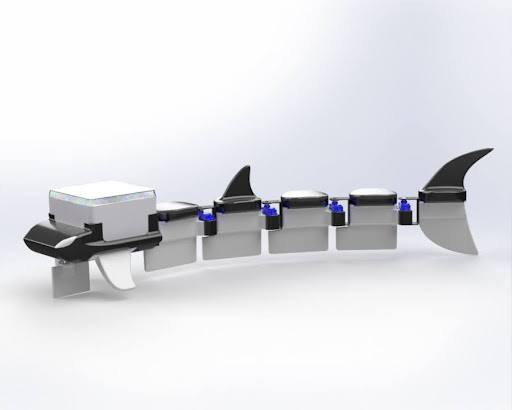

A robotic fish platform that investigates the optimization of aquatic propulsion through bio-inspired fishtail locomotion, featuring modular tail segments and controllable swimming patterns.

May 2025

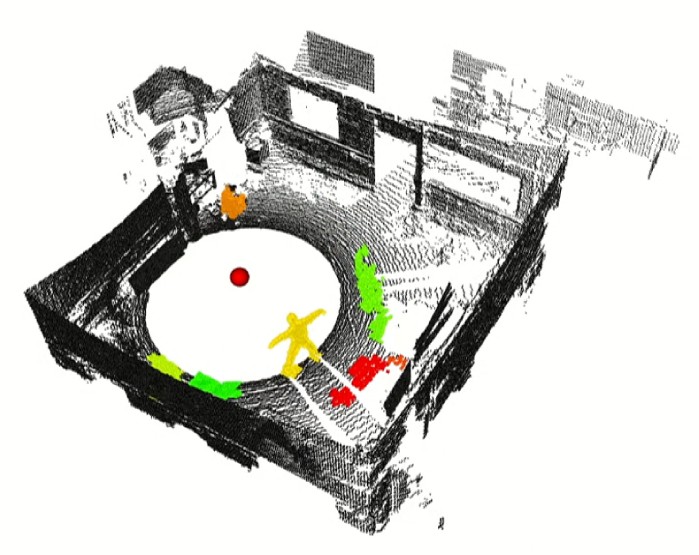

A lightweight architecture for dynamic object segmentation in 3D point clouds, optimizing for human detection with reduced model parameters while maintaining high accuracy.

April 2025

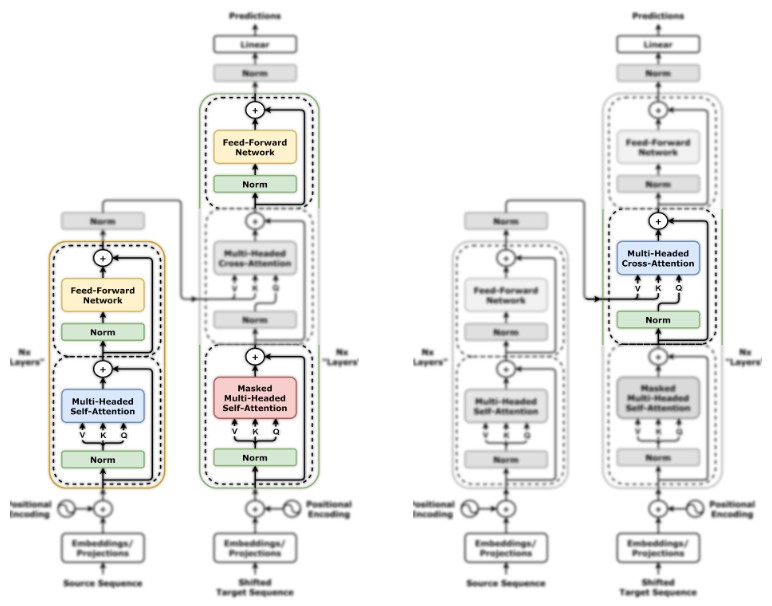

Sequence models built on recurrent neural networks: text generation on WikiText-2, and attention-based speech-to-text transcription on the LibriSpeech corpus.

April 2025

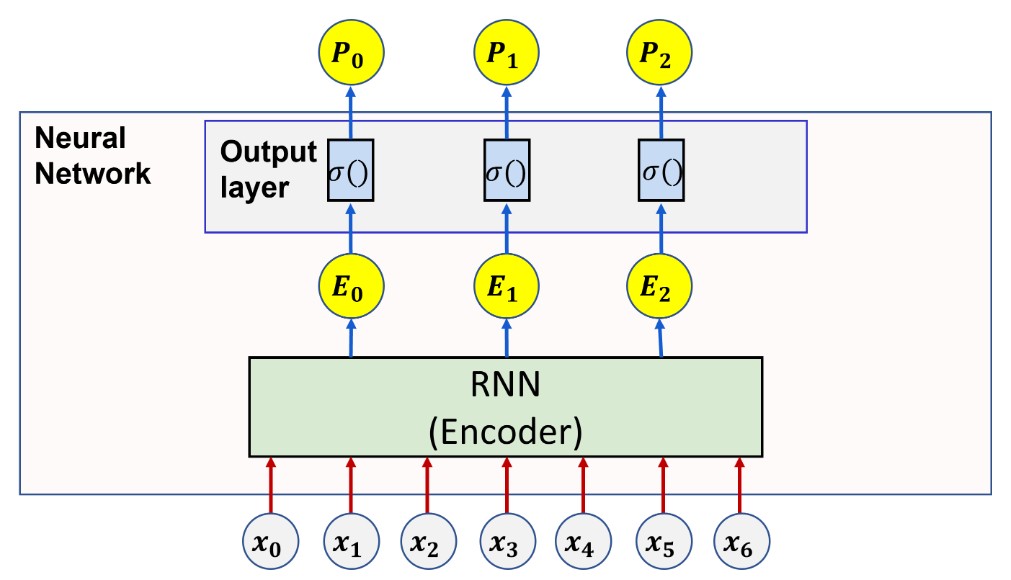

An end-to-end Automatic Speech Recognition (ASR) system built with pyramidal bidirectional LSTM and Connectionist Temporal Classification, achieving high accuracy in phoneme prediction from unaligned speech data.
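As a rough illustration of the Connectionist Temporal Classification step mentioned above, the sketch below shows greedy CTC decoding: consecutive repeated frame labels are collapsed, then blank symbols are dropped. The function name `ctc_greedy_decode` and the choice of index 0 for the blank are assumptions made for this example, not details taken from the project.

```python
from itertools import groupby

BLANK = 0  # assumed index of the CTC blank symbol (hypothetical choice)


def ctc_greedy_decode(frame_labels, blank=BLANK):
    """Turn per-frame best labels into an output sequence.

    Step 1: collapse runs of identical consecutive labels.
    Step 2: remove blank symbols.
    """
    collapsed = [label for label, _ in groupby(frame_labels)]
    return [label for label in collapsed if label != blank]


# Per-frame argmax labels for eight frames; 1 and 2 stand in for phoneme ids.
frames = [0, 1, 1, 0, 1, 2, 2, 0]
decoded = ctc_greedy_decode(frames)
```

The blank between the two runs of label 1 is what lets the decoder emit the same phoneme twice in a row, which is why unaligned speech can be trained on without frame-level alignments.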

February 2025

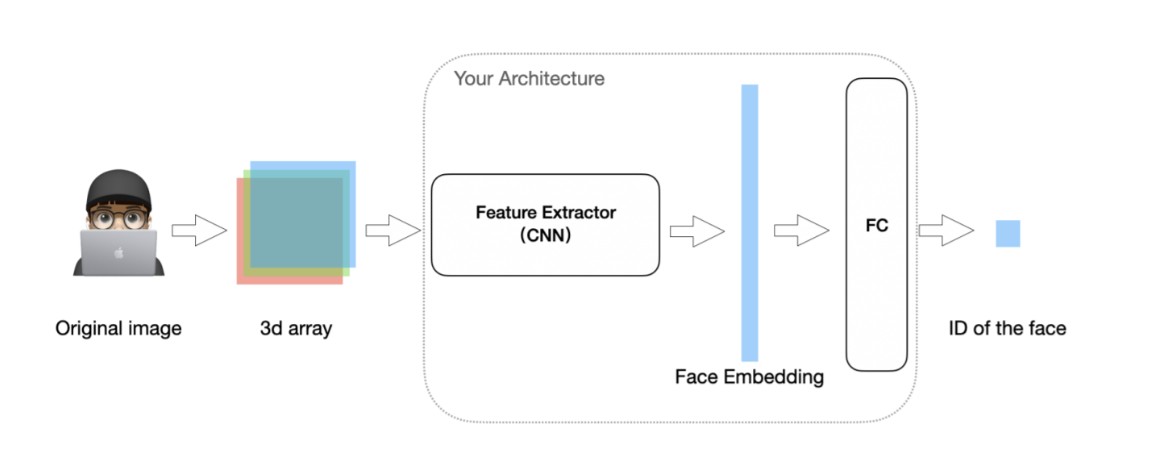

A deep learning model that extracts discriminative facial features.

February 2025

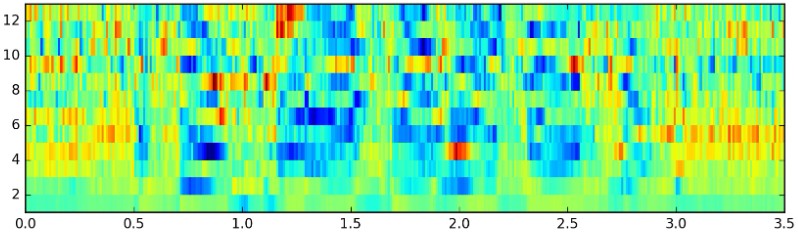

A deep learning system that recognizes phoneme states from speech recordings, implementing advanced neural network architectures and achieving 86% accuracy on frame-level speech classification.

April 2024

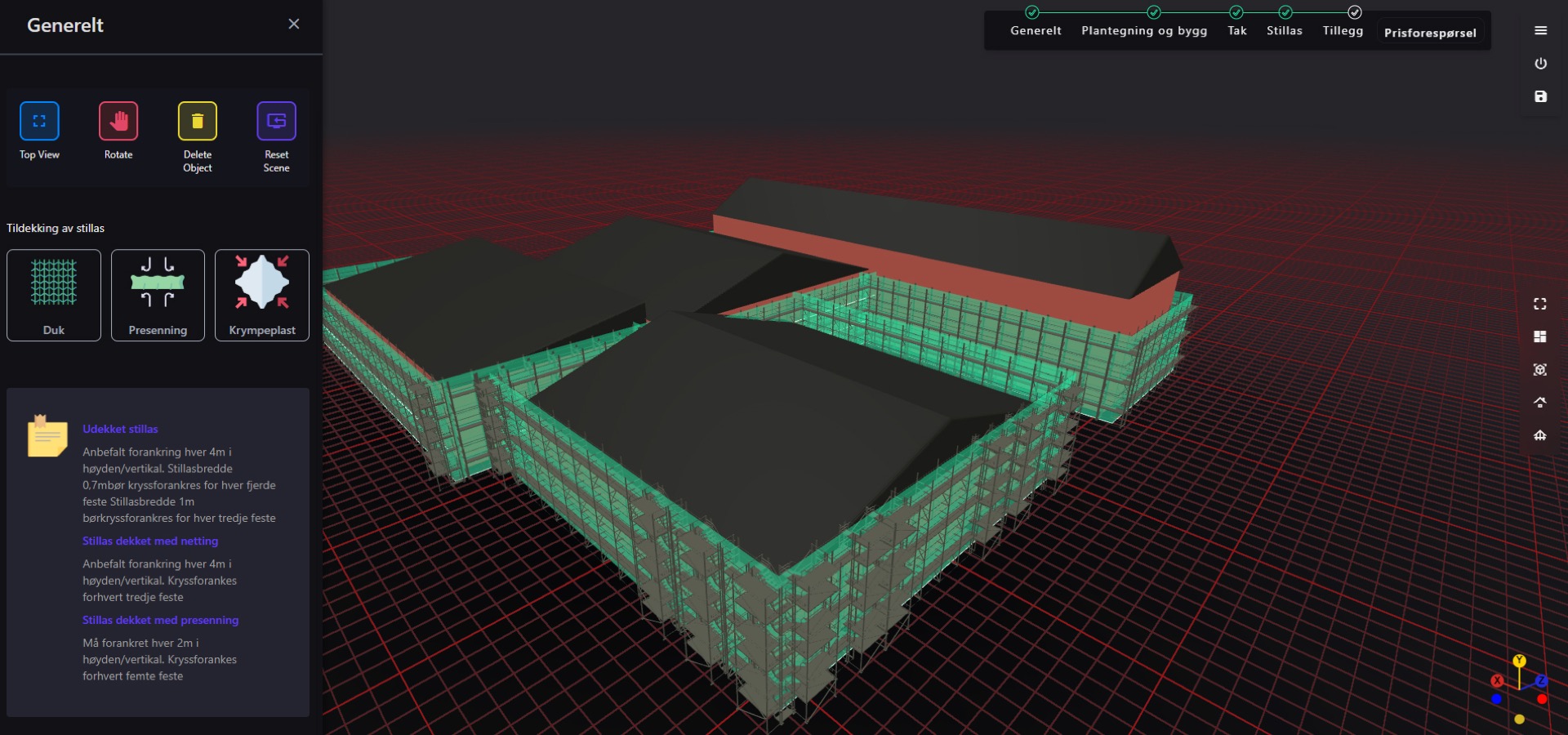

A high-performance 3D application for scaffolding graphics, utilizing WebGPU and ifc.js for real-time visualization and automated Bill of Materials (BOM) generation.

December 2023

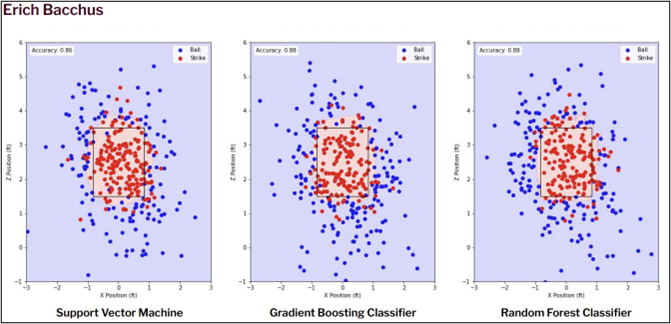

A comprehensive baseball analytics platform that uses machine learning to analyze player performance and predict game outcomes.

April 2023

A bio-inspired walking robot based on Theo Jansen's Strandbeest mechanism, featuring advanced control systems and autonomous capabilities.

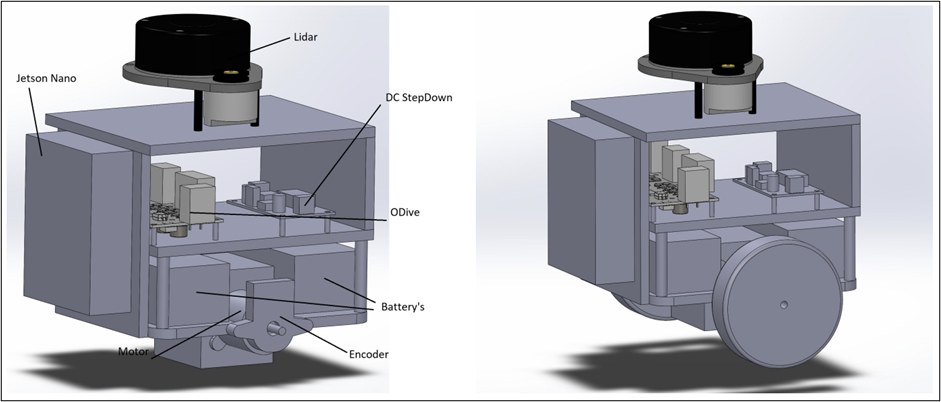

January 2023

An advanced robotic system capable of autonomously navigating complex mazes using LiDAR technology and sophisticated control algorithms.

July 2022

A ThreeJS sandbox for experimenting with shaders, audio visualizations, geometries, and procedural generation in the browser.